If you require an accommodation to attend this event, please contact us at uchi@uconn.edu or by phone at (860) 486-9057. We can request ASL interpreting and other accommodations offered by the Center for Students with Disabilities.

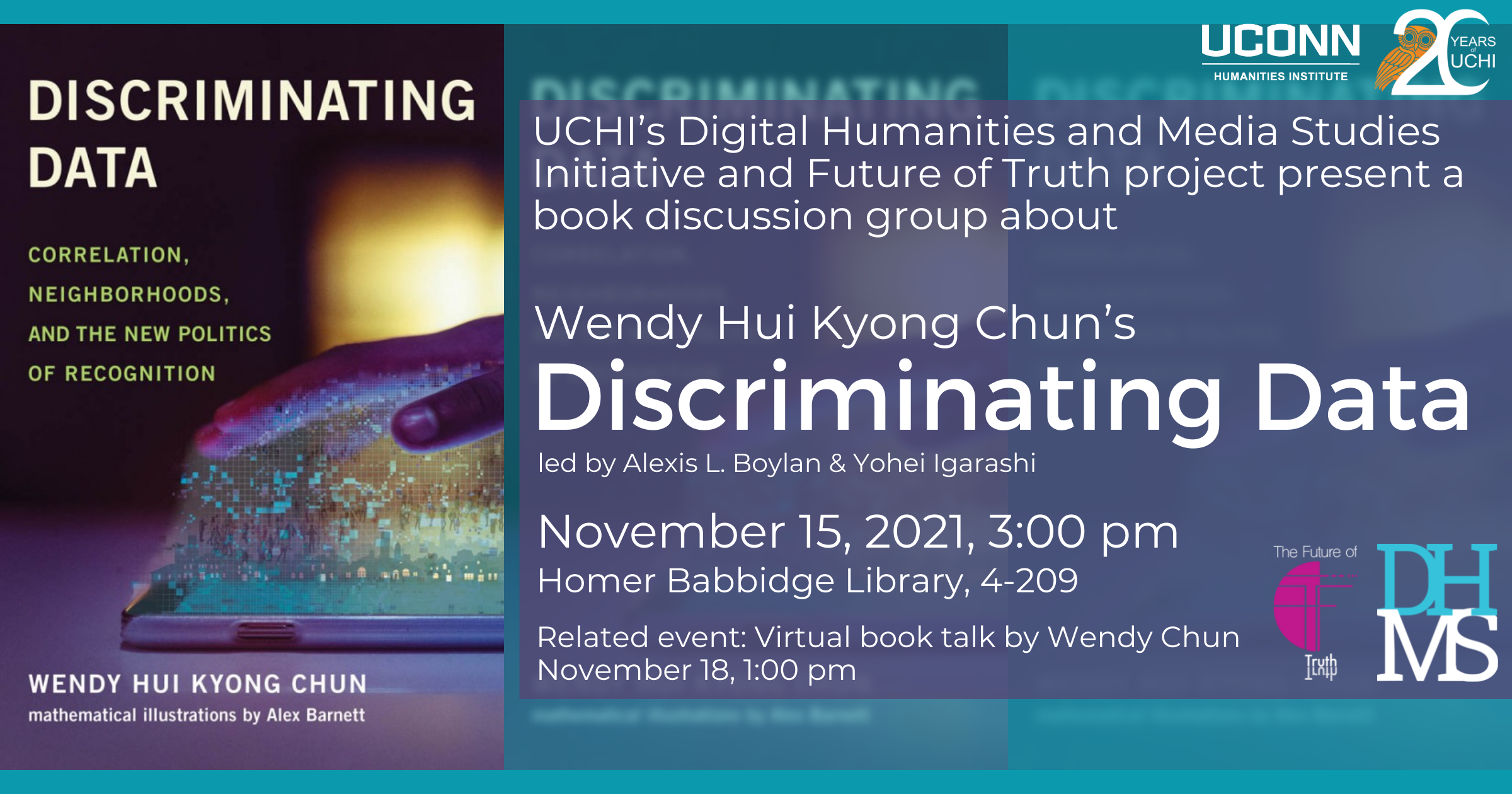

The Digital Humanities and Media Studies Initiative and the Future of Truth project invite you to a book discussion group about:

Discriminating Data

by Wendy Hui Kyong Chun

led by Alexis L. Boylan and Yohei Igarashi

November 15, 2021, 3:00–4:00pm

Homer Babbidge Library, 4-209

To participate, please email uchi@uconn.edu. The first twenty participants to sign up will receive a free copy of Discriminating Data: Correlation, Neighborhoods, and the New Politics of Recognition (MIT Press, 2021).

In Discriminating Data, Wendy Hui Kyong Chun reveals how polarization is a goal—not an error—within big data and machine learning. These methods, she argues, encode segregation, eugenics, and identity politics through their default assumptions and conditions. Correlation, which grounds big data’s predictive potential, stems from twentieth-century eugenic attempts to “breed” a better future. Recommender systems foster angry clusters of sameness through homophily. Users are “trained” to become authentically predictable via a politics and technology of recognition. Machine learning and data analytics thus seek to disrupt the future by making disruption impossible.

Chun, who has a background in systems design engineering as well as media studies and cultural theory, explains that although machine learning algorithms may not officially include race as a category, they embed whiteness as a default. Facial recognition technology, for example, relies on the faces of Hollywood celebrities and university undergraduates—groups not famous for their diversity. Homophily emerged as a concept to describe white U.S. resident attitudes to living in biracial yet segregated public housing. Predictive policing technology deploys models trained on studies of predominantly underserved neighborhoods. Trained on selected and often discriminatory or dirty data, these algorithms are only validated if they mirror this data.

How can we release ourselves from the vice-like grip of discriminatory data? Chun calls for alternative algorithms, defaults, and interdisciplinary coalitions in order to desegregate networks and foster a more democratic big data.

[Book description from MIT Press site]

In conjunction with this event, Wendy Chun will give a virtual book talk on November 18, 2021, at 1:00pm. To attend the talk, register here.